THX Case Study: AI Safety — Build Now, Hand Off with Safeguards

From the THX Series Hub: Government That Works

Exploring how government can lead on AI regulation, ethics, and public protection before it's too late.

What’s at Stake

Artificial intelligence isn’t a future problem. It’s here—shaping hiring, policing, education, healthcare, warfare, and even democracy.

While private companies race to build faster, smarter, and more profitable systems, the public sector is struggling to keep up. Some argue government should stay out of the way. Others fear that if it doesn’t act soon, the damage will be irreversible.

So the real question isn’t if the government should get involved—it’s how early, how deeply, and for how long.

THX Framework Quick Guide

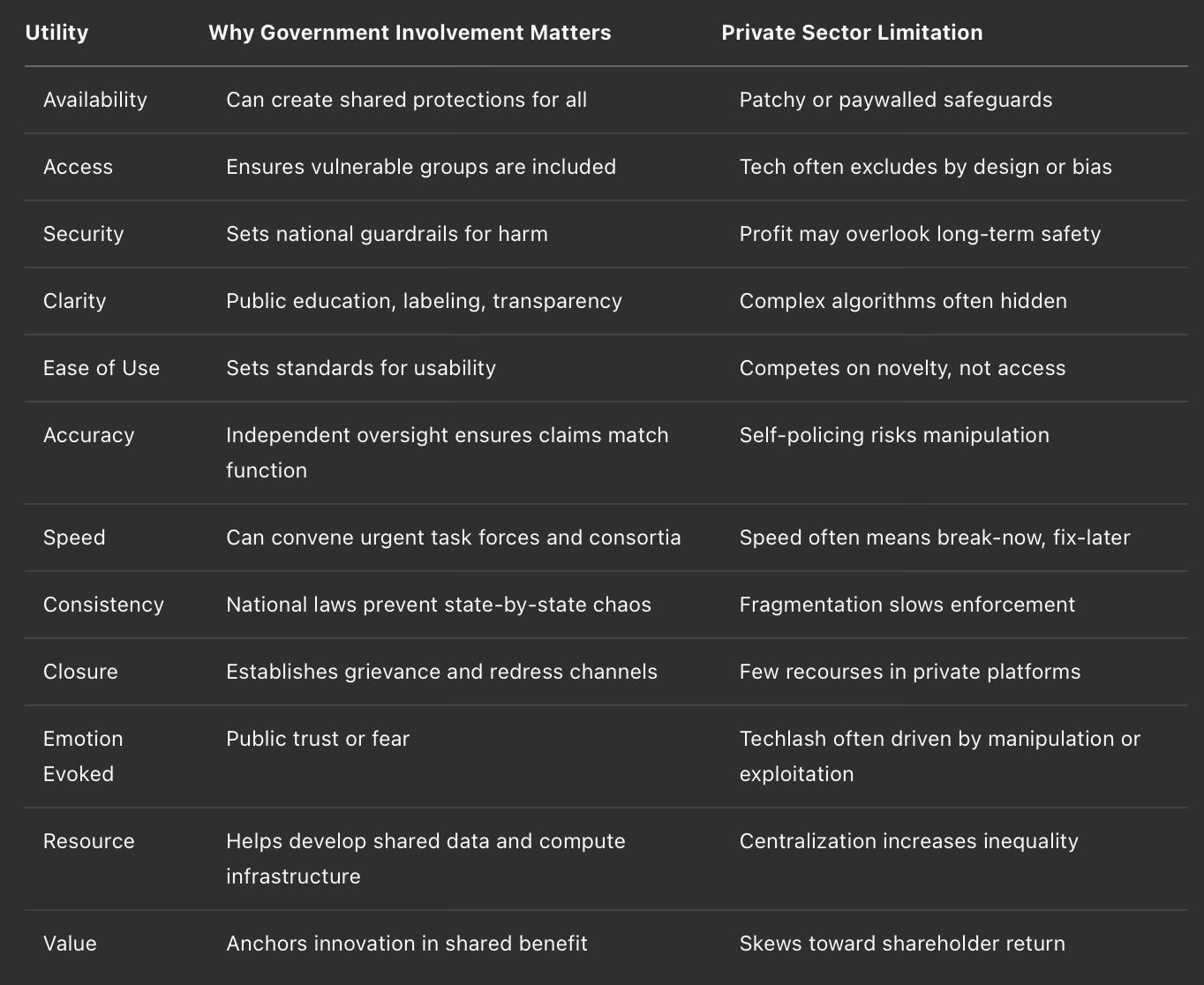

The 12 Utilities — A tool to measure whether a service is useful, fair, and functional across the things people actually need.

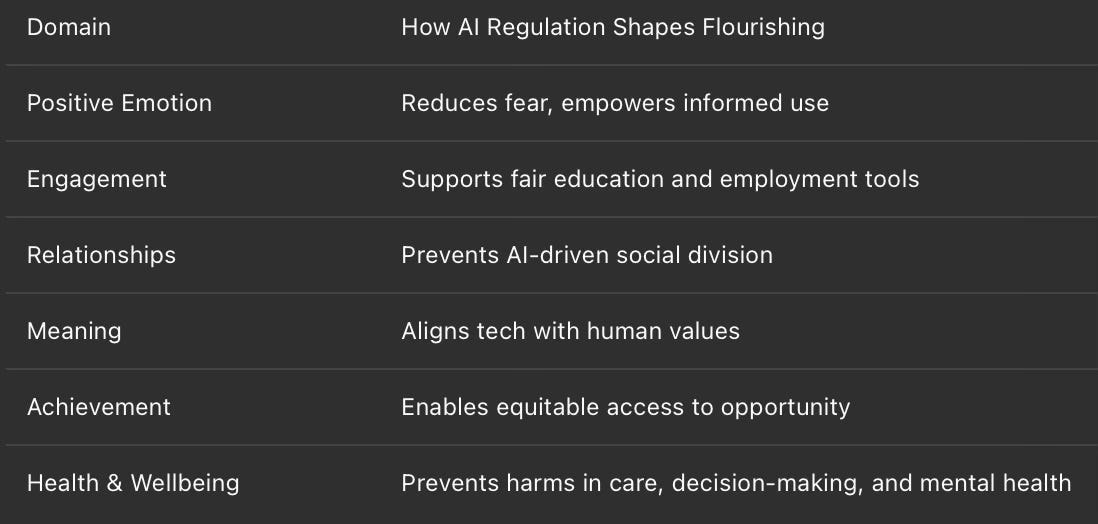

PERMAH — A framework from positive psychology that helps us ask if something helps people truly flourish—not just survive.

Prospect Theory — A reminder that people feel losses more strongly than gains—so taking something away often hurts more than adding something helps.

Admiration Equation — Explains how people build lasting loyalty through skill, goodness, awe, and gratitude—not just results.

Micro-Moments — The little experiences that shape how people feel, trust, and remember a service—or a system—for life.

(For a deeper dive, see the full THX Framework introduction post.)

The 12 Utilities

Verdict: Government must lead early—setting ethical, equitable foundations—before handing off with regulation and accountability.

PERMAH Impact

AI can help people flourish—but only if designed with flourishing in mind.

Prospect Theory: The Danger of Delay

The harm from losing trust in AI—or in institutions that adopt it blindly—may outweigh the gains of early tech adoption.

Once a bad system is widespread (e.g., biased predictive-policing software), the public backlash can poison all future efforts.

Inaction creates a vacuum where fear, exploitation, and misinformation thrive.

Admiration Equation

Skill: Building fair, explainable, and safe models deserves respect.

Goodness: Aligning with human rights, not just human behavior, builds trust.

Awe: AI that detects cancer or helps disabled people communicate inspires genuine wonder.

Gratitude: When government protects people from unseen harm, it earns quiet loyalty.

Regulation done well builds admiration—not resentment.

Micro-Moments

A teacher uses AI to adapt lesson plans to a student’s needs.

A job applicant learns an algorithm didn’t rule them out based on race or disability.

A citizen sees clearly why they were denied benefits—and who to appeal to.

These experiences shape not just how we use AI—but whether we trust the future.

Real-World Incidents & Dilemmas

Hiring Discrimination (Amazon AI Tool, 2018):

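An internal Amazon recruiting tool was found to downgrade resumes that included the word “women’s” (as in “women’s chess club captain”). It had been trained on ten years of resumes submitted to the company, most of them from men, so it learned to reproduce that skew. Amazon ultimately scrapped the tool.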

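Criminal Risk Scoring (COMPAS, U.S.):

Software used in U.S. courts to predict whether defendants would reoffend showed racial bias. A 2016 ProPublica analysis found that Black defendants who did not go on to reoffend were nearly twice as likely as comparable white defendants to be labeled higher risk.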

Chatbot Hallucinations & Misinformation:

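Early chatbots built on large language models (LLMs), such as ChatGPT and Bard, have generated confident but false answers, including fabricated legal cases and inaccurate medical advice. Because these systems cannot explain how they reached an answer, users struggle to tell fact from invention, which raises the risk of harm.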

Deepfake Politics and Identity Theft:

AI-generated audio and video have been used to fake endorsements, manipulate voters, and scam families with cloned voices of loved ones.

What These Reveal:

The most dangerous harms aren’t always visible. Bias, surveillance, and loss of agency often go unrecognized until they’re widespread.

Without public frameworks for safety and redress, trust collapses—and so does adoption.

Current Landscape

The EU’s AI Act, adopted in 2024, is setting a global precedent for tiered, risk-based regulation.

The U.S. has issued executive orders and convened advisory councils, but it still lacks a comprehensive federal AI law.

Leading AI firms (OpenAI, Anthropic, Meta, Google) are writing their own ethical charters, which raises concerns about relying on self-regulation.

Whistleblowers have revealed bias, surveillance, and harm that would’ve gone unnoticed without public scrutiny.

Final Thought

AI will shape nearly every system we rely on. If government waits until those systems are broken, it will be too late to fix them.

This is a clear case for Build Now, Hand Off with Safeguards, with regulation, public infrastructure, and transparency as the foundation.

The role of government is not to stifle AI—but to steer it with wisdom.

Because no one should have to trade their dignity for innovation.